Data-driven computer vision (CV) tasks remain limited by the amount of labeled data. Recently, several semantic NeRFs have been proposed to render and synthesize novel-view semantic labels. Although current NeRF methods achieve spatially consistent color and semantic rendering, their capacity for geometric representation is limited.
This problem is caused by the lack of global information

among rays in the traditional NeRFs since they are

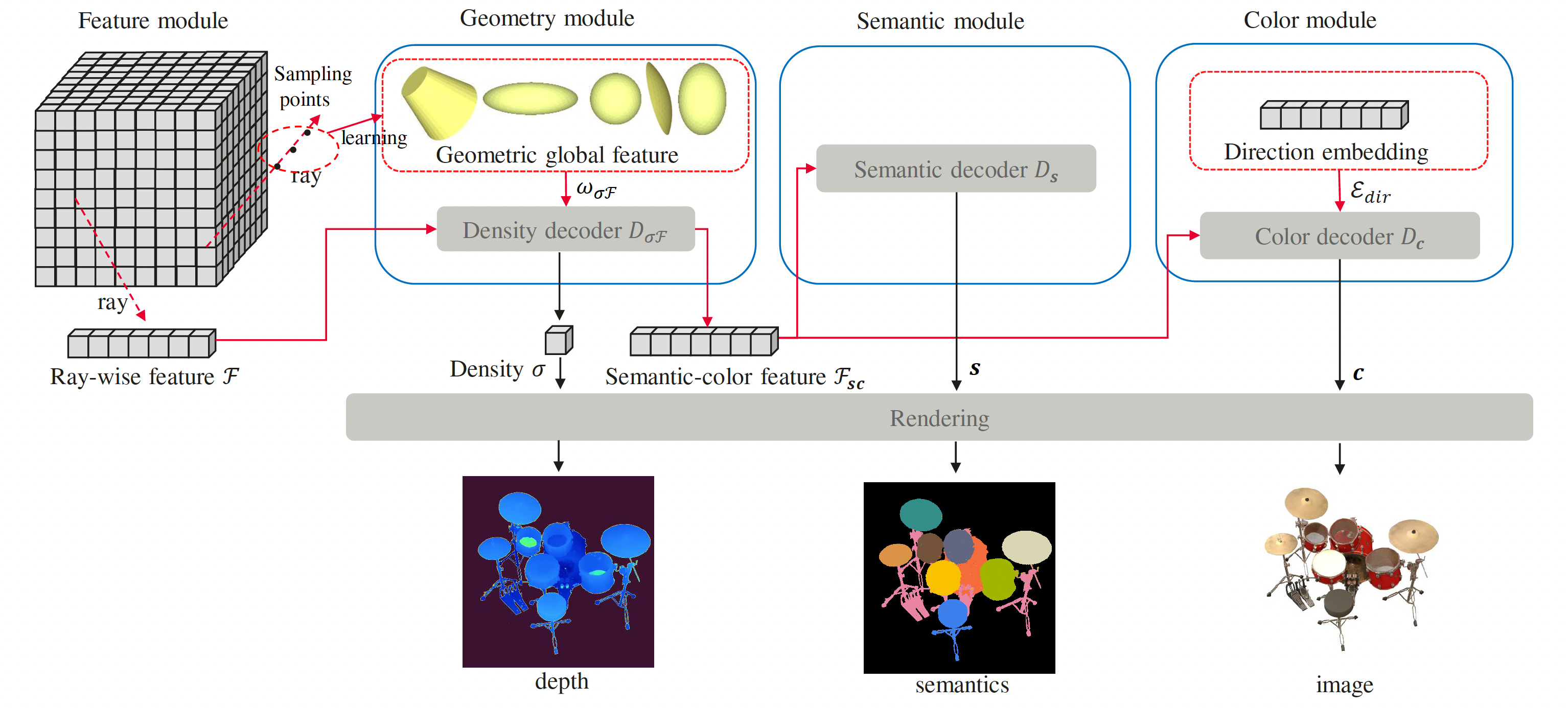

trained with independent directional rays. To address this

problem, we introduce the point-to-surface global feature

into NeRF to associate all rays, which enables the single

ray representation capability of global geometry. In particular,

the relative distance of each sampled ray point to the

learned global surfaces is calculated to weight the geometry

density and semantic-color feature. We also carefully

design the semantic loss and back-propagation function to

solve the problems of unbalanced samples and the disturbance

of implicit semantic field to geometric field. The experiments

validate the 3D scene annotation capability with

few feed labels. The quantification results show that our

method outperforms the state-of-the-art works in efficiency,

geometry, color and semantics on the public datasets. The

proposed method is also applied to multiple tasks, such

as indoor, outdoor, part segmentation labeling, texture rerendering

and robot simulation.
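
To make the idea of distance-based weighting concrete, the following is a minimal sketch, not the formulation used in this work. It assumes a Gaussian falloff for the weight, uses a fixed sphere as a stand-in for a learned global surface, and applies standard alpha compositing along one ray. All function names and parameters (`surface_weight`, `sphere_distance`, `composite_weights`, `bandwidth`) are hypothetical and chosen only for illustration.

```python
import math


def surface_weight(distance, bandwidth=0.1):
    """Assumed Gaussian falloff: 1.0 on the surface, near 0 far away from it."""
    return math.exp(-((distance / bandwidth) ** 2))


def sphere_distance(point, center, radius):
    """Unsigned distance from a 3D point to a sphere (stand-in for a learned surface)."""
    return abs(math.dist(point, center) - radius)


def composite_weights(origin, direction, near, step, raw_densities,
                      center, radius, bandwidth=0.1):
    """Weight each raw density by surface proximity, then alpha-composite.

    Samples are placed at t = near + i * step along the ray.
    Returns the per-sample compositing weights T_i * alpha_i.
    """
    weights = []
    transmittance = 1.0
    for i, raw_sigma in enumerate(raw_densities):
        t = near + i * step
        point = [o + t * d for o, d in zip(origin, direction)]
        # Density is suppressed for samples far from the (assumed) surface.
        dist = sphere_distance(point, center, radius)
        sigma = raw_sigma * surface_weight(dist, bandwidth)
        alpha = 1.0 - math.exp(-sigma * step)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


# Example: a ray along +z that enters a sphere of radius 0.5 centered at z = 2.
example = composite_weights(
    origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0),
    near=1.0, step=0.25, raw_densities=[20.0] * 8,
    center=(0.0, 0.0, 2.0), radius=0.5,
)
print([round(w, 4) for w in example])
```

In this sketch the raw density is identical at every sample, so the weighting alone concentrates the compositing weight on the sample where the ray first meets the surface. The same per-sample weight could be applied to a semantic-color feature in the same way.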